ChatGPT faces two distinct hurdles.

- It's too small.

- It's too big.

Allow me to explain.

Too Small

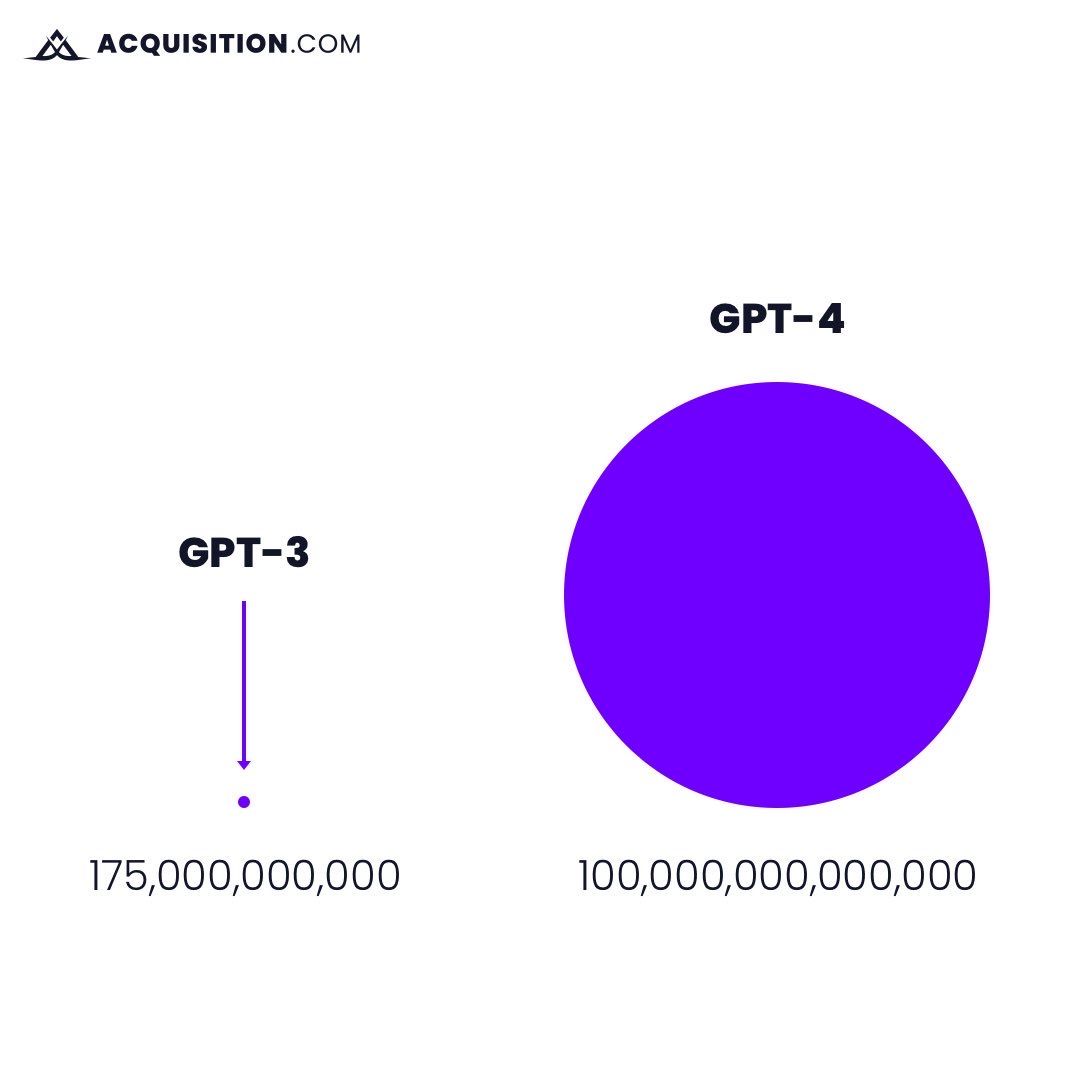

ChatGPT is too small. It needs more data. GPT-4 is reportedly an order of magnitude larger than GPT-3: it can process 8x more words and is said to consider 500x more parameters.

That is a huge step forward. But it is not enough.

It still lacks knowledge of current events, because its training data cuts off in 2021. It also needs the ability to absorb new information in near real-time, as events happen.

Not only does it need to be more agile, it needs to be more accurate. It is not merely imperfect; its error rate is unacceptably high. To achieve its ambitions, it needs to be much, much bigger.

Too Big

Setting aside the legal question of how courts will define fair use for generative AI models, the biggest issue facing generative AI is its unit economics.

OpenAI and its peers aren't profitable - not even close.

- Sam Altman

Current compute costs are untenable. They will undoubtedly fall as the industry matures, but compute is a secondary issue.

Many of these mainstream models were built on data acquired before the lights were turned on. That data was cheap, nearly free. But now that the lights are on, data troves like Twitter, Reddit, and Stack Overflow are fencing off (and charging for) AI bots' access. Efforts to grow, i.e., GPT-5 and beyond, will be hampered by a shortage of the internet's greatest natural resource: data. And where access is granted at all, scraping dozens of walled gardens will be far more expensive, easily negating any future compute savings.

Those realities lead to an obvious conclusion: ChatGPT can't be an ad-supported business, certainly not anytime soon. The OpenAIs of the world will need to charge users for access, which will slow adoption and limit scale.

That's hardly a recipe for replacing Google. Instead, it looks more like Uber: buying its way into the market and, predictably, lacking sustainability.

The Future of Generative AI

So are large language models (LLMs) doomed?

I frequently tell clients that business on the internet is won in the niches. I think the same is true of AI.

A one-stop-shop GPT-X seems infeasible for the reasons above. But the underlying technology could obviously be repurposed in a million ways.

I see much broader application and adoption in the niches, with a thousand smaller, more focused models. I see brands using their own data to create models that serve limited, specific purposes.

In a world full of successful, limited-use models, consolidation is bound to happen. But the future of this industry will be built from the ground up, not from the top down.
